Google’s AI innovation never sleeps, and the latest offering is the “Gemini 2.0 Flash Experimental” model. This isn’t just another incremental update; it’s a glimpse into the future of highly efficient and accessible AI. If you’re eager to get your hands on the cutting edge and experience blazing-fast inference, this post will guide you through accessing, understanding, and using this exciting new model.


What is Gemini 2.0 Flash Experimental?

Building on the existing Gemini 2.0 Flash, the “Experimental” version is designed to push the boundaries of speed and responsiveness. Google hasn’t published full details yet, so the points below mix what has been announced with what we can reasonably infer:

- Focus on Extreme Efficiency: The core goal is to achieve even faster response times and lower computational costs compared to the standard Gemini 2.0 Flash. This makes it ideal for real-time applications and resource-constrained environments.

- Experimental Features: The “Experimental” designation suggests Google is testing new techniques and optimizations. This may include novel model architectures, quantization methods, or advanced caching strategies.

- Potential Trade-offs: As with any experimental model, there might be trade-offs in terms of accuracy or overall capabilities compared to more established models. Google is likely collecting data and feedback to optimize these aspects.

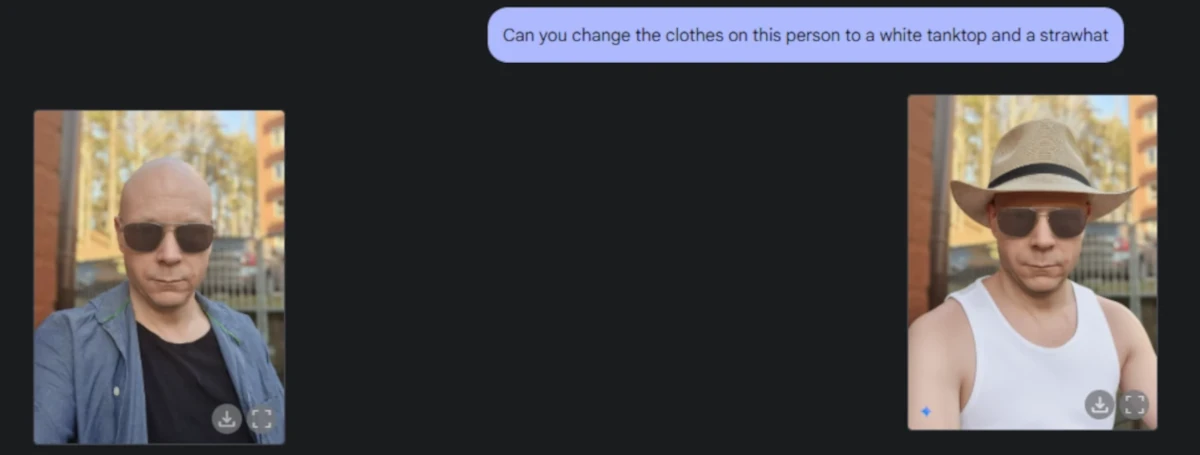

- Image Alteration via Text: The biggest buzz surrounds the capability to provide text instructions that directly modify images. Think “add a sunset,” “make the sky bluer,” or “turn the car red” – all through simple text commands.
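To make the image-alteration idea concrete, here is a minimal sketch of what a text-plus-image edit request might look like. It assumes the Gemini REST `generateContent` request shape (a `contents` list of turns whose `parts` hold either text or base64 `inlineData`) and the `responseModalities` generation setting for requesting image output. The helper name `build_edit_request` is hypothetical, and the fields the experimental model accepts may differ, so check Google’s current API reference before relying on it.

```python
import base64
import json


def build_edit_request(image_bytes: bytes, instruction: str,
                       mime_type: str = "image/png") -> dict:
    """Build a generateContent request body asking the model to edit an image.

    Assumes the Gemini REST request shape: one user turn whose parts carry the
    text instruction and the image as base64 inline data.
    """
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {"text": instruction},
                    {
                        "inlineData": {
                            "mimeType": mime_type,
                            # Binary image data must be base64-encoded inside JSON.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ],
            }
        ],
        # Ask for image output alongside text (assumed setting; models without
        # image-output support may reject it).
        "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]},
    }


if __name__ == "__main__":
    fake_png = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real image
    body = build_edit_request(fake_png, "Turn the car red.")
    print(json.dumps(body, indent=2))
```

Keeping the instruction and the image in the same turn is what lets a single request say “change *this* picture in *this* way.”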


How to Access Gemini 2.0 Flash Experimental

Accessing the experimental model will depend on Google’s specific rollout strategy. However, here are the most likely access methods:

- Google AI Studio (Recommended): This is often the primary platform for experimenting with Google’s latest AI models. Keep an eye on Google AI Studio for announcements and instructions on how to enable access to the “Experimental” model. You’ll likely need a Google account.

- Vertex AI: If you’re using Google Cloud’s Vertex AI platform, check Model Garden for the availability of Gemini 2.0 Flash Experimental. You might need to enroll in a specific program or request access.

- API Access (Likely, but may require waitlist): Google may offer API access to developers who want to integrate the experimental model into their applications, possibly behind a waitlist or specific usage requirements. This matters most if you plan to use the image alteration features programmatically.
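If API access is available to you, calling the model from code could look roughly like the sketch below. It assumes the public Gemini REST endpoint under `https://generativelanguage.googleapis.com/v1beta/models/`, an API key sent in the `x-goog-api-key` header, and the model ID `gemini-2.0-flash-exp`. That model ID and the `GEMINI_API_KEY` environment variable name are assumptions, so confirm the exact model name in Google AI Studio. Only the standard library is used, and preparing the request is kept separate from sending it so you can inspect it first.

```python
import json
import os
import urllib.request

# Assumption: model ID used during the experimental rollout; confirm it in
# Google AI Studio, since experimental names change.
MODEL_ID = "gemini-2.0-flash-exp"
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def make_request(body: dict, api_key: str,
                 model: str = MODEL_ID) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the generateContent REST endpoint."""
    url = f"{BASE_URL}/{model}:generateContent"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # API key from AI Studio, sent as a header rather than in the URL.
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 120.0) -> dict:
    """Send a prepared request and decode the JSON response.

    Image generation can be slow, hence the generous default timeout.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical env var name
    if key:
        req = make_request({"contents": [{"parts": [{"text": "Hello"}]}]}, key)
        print(send(req))
    else:
        print("Set GEMINI_API_KEY to send a real request.")
```

Reading the key from the environment keeps it out of source control, which matters once the code is shared or committed.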

Step-by-Step Access Guide (Example Using Google AI Studio):

(Note: These steps are based on typical Google AI Studio procedures and might vary depending on the specific rollout).

- Log in to Google AI Studio: Go to https://ai.google.dev/, open Google AI Studio from there, and sign in with your Google account.

- Check for Announcements: Look for any announcements or banners regarding Gemini 2.0 Flash Experimental, especially anything mentioning image editing capabilities.

- Select the Model: In the model selection dropdown, look for a variant specifically labeled “Gemini 2.0 Flash Experimental” (or a similar name).

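- Enable API Access (If Required): If you plan to call the model from code, generate an API key in Google AI Studio and keep it out of source control. On Vertex AI, you instead enable the API in your Google Cloud project and authenticate with that project’s credentials.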

- Start Experimenting! Follow the documentation and examples to start using the model, paying close attention to any image-editing features.
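Once requests are going through, the model’s answer comes back as JSON. The sketch below assumes the REST response shape in which `candidates[0].content.parts` holds a mix of `text` parts and base64 `inlineData` image parts. `split_response` and `save_images` are hypothetical helpers, not part of any Google SDK.

```python
import base64
from pathlib import Path


def split_response(response: dict) -> tuple[str, list[tuple[str, bytes]]]:
    """Return (joined text, [(mime_type, image_bytes), ...]) from the first candidate."""
    texts: list[str] = []
    images: list[tuple[str, bytes]] = []
    candidates = response.get("candidates") or []
    if not candidates:
        return "", images  # no candidate returned, nothing to extract
    for part in candidates[0].get("content", {}).get("parts", []):
        if "text" in part:
            texts.append(part["text"])
        elif "inlineData" in part:
            blob = part["inlineData"]
            # Image bytes arrive base64-encoded inside the JSON.
            images.append((blob.get("mimeType", "application/octet-stream"),
                           base64.b64decode(blob["data"])))
    return "\n".join(texts), images


def save_images(images: list[tuple[str, bytes]], stem: str = "edited") -> list[Path]:
    """Write each image to <stem>_<index>.<subtype>, e.g. edited_0.png."""
    paths = []
    for i, (mime, data) in enumerate(images):
        path = Path(f"{stem}_{i}.{mime.split('/')[-1]}")
        path.write_bytes(data)
        paths.append(path)
    return paths
```

Passing a parsed response to `split_response` gives you the model’s commentary as a string and any edited images as raw bytes; `save_images` then writes files such as `edited_0.png` to the current directory.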

New Features and Capabilities (What to Expect)

While the final feature set hasn’t been confirmed, here are some likely areas of improvement in the Experimental model:

- Lower Latency: Expect even faster response times, potentially enabling new types of real-time applications.

- Reduced Cost: The optimizations could lead to lower inference costs, making the model more accessible for a wider range of users.

- Enhanced Quantization: The model might use more aggressive quantization techniques to reduce its size and improve performance. This could involve storing the model’s parameters in lower-precision data types, for example 8-bit integers instead of 16- or 32-bit floating-point numbers.

- Optimized Caching: Advanced caching mechanisms might be implemented to store frequently accessed data and reduce the need for repeated computations.

- New API Endpoints: There might be new API endpoints or parameters to control the model’s behavior and access its experimental features.

- Text-Guided Image Editing: This is the headline feature: you alter an image by giving the model text instructions. Possible uses include:

  - Style Transfer: Changing the style of an image (e.g., “make it look like a Van Gogh painting”).

  - Object Manipulation: Adding, removing, or modifying objects in the image (e.g., “add a cat,” “remove the car,” “change the color of the shirt”).

  - Scene Modification: Altering the overall scene (e.g., “add a sunset,” “make it snow”).

  - Image Enhancement: Improving the quality of the image (e.g., “sharpen the image,” “increase the contrast”).
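Google hasn’t said which quantization scheme, if any, the experimental model uses. As a generic illustration of what “smaller data types” means, the sketch below applies symmetric per-tensor 8-bit quantization to a handful of weights: each float is divided by a shared scale, rounded to an integer in [-127, 127], and stored in one byte instead of four (float32). This is a textbook technique, not Gemini’s implementation.

```python
from array import array


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization (illustrative, not Gemini's scheme).

    The largest absolute weight maps to +/-127; everything else scales linearly.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero when every weight is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() returns an int; the clamp guards against floating-point overshoot.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Approximate the original weights from int8 codes and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.02, 1.0]
    q, scale = quantize_int8(weights)
    packed = array("b", q)  # signed 8-bit storage: one byte per weight
    restored = dequantize_int8(q, scale)
    print("codes:", q, "scale:", scale)
    print("bytes as int8:", len(packed) * packed.itemsize,
          "vs float32:", len(weights) * 4)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The reconstruction error per weight is at most half the scale. That lost precision is the kind of accuracy-for-size trade-off the “Potential Trade-offs” point above refers to.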

Key Tips for Experimenting with Image Editing:

- Start with Clear and Concise Prompts: The more specific your instructions, the better the results.

- Experiment with Different Styles: Try different styles and artistic techniques to see what the model can create.

- Use High-Quality Images: The quality of the input image will affect the quality of the output.

- Be Patient: Image editing can be computationally intensive, so it might take some time to generate the results.

- Iterate and Refine: Don’t be afraid to experiment with different prompts and settings to achieve the desired outcome.
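The “Iterate and Refine” tip maps naturally onto a multi-turn conversation: keep the earlier turns, including the model’s reply, and append each new instruction so the model can build on its previous edit. The sketch below assumes the REST `contents` format with alternating `user` and `model` roles. Whether the experimental model reliably edits its own earlier output this way is an assumption worth testing, and the helper names are hypothetical.

```python
import copy

# Assumption: the same generation setting used for single-turn image edits.
IMAGE_CONFIG = {"responseModalities": ["TEXT", "IMAGE"]}


def start_history(instruction: str, image_part: dict) -> list[dict]:
    """First user turn: the edit instruction plus the source image part."""
    return [{"role": "user", "parts": [{"text": instruction}, image_part]}]


def record_model_reply(history: list[dict], reply_parts: list[dict]) -> list[dict]:
    """Append the model's reply (text and/or image parts) as a 'model' turn."""
    return history + [{"role": "model", "parts": copy.deepcopy(reply_parts)}]


def refine(history: list[dict], follow_up: str) -> tuple[list[dict], dict]:
    """Append a follow-up instruction and build the next request body."""
    new_history = history + [{"role": "user", "parts": [{"text": follow_up}]}]
    body = {"contents": new_history, "generationConfig": copy.deepcopy(IMAGE_CONFIG)}
    return new_history, body


if __name__ == "__main__":
    # Placeholder image: just the 8-byte PNG signature, base64-encoded.
    image_part = {"inlineData": {"mimeType": "image/png", "data": "iVBORw0KGgo="}}
    history = start_history("Add a sunset.", image_part)
    # In practice, reply_parts would come from the previous response's content.parts.
    history = record_model_reply(history, [{"text": "Added a sunset."}])
    history, body = refine(history, "Make the sunset more orange.")
    print([turn["role"] for turn in body["contents"]])
```

Running it prints `['user', 'model', 'user']`. Each helper returns a new list rather than mutating the old one, so you can branch from an earlier turn if a refinement goes wrong.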

Conclusion: A Promising Step Forward… and into Image Manipulation!

The Gemini 2.0 Flash Experimental model represents a significant step toward faster, more efficient AI, and it opens exciting new doors for creative image editing. By exploring its capabilities and providing feedback, you can help shape where this technology goes next. Keep an eye on Google’s official channels for announcements and updates on how to access and use the model.