The Regulatory Maze and the Unseen Architects

Throughout this series, we’ve dissected the often-performative dance of politicians grappling with artificial intelligence. We’ve explored why traditional top-down legislation struggles to keep pace with AI’s exponential evolution (Part 1), the profound gap between public perception and AI’s true capabilities (Part 2), how existing laws are often sufficient to address AI-perpetrated harms (Part 3), and the urgent need to shift our societal focus from futile attempts at control to building human resilience through education (Part 4).

But as governments deliberate and debate, a quieter, yet arguably more potent, form of “regulation” is already in full swing, operating largely beyond the direct approval or disapproval of any single nation-state. This concluding part pulls back the curtain on these powerful, private gatekeepers – the corporations that, through their control of essential digital infrastructure, are already setting de facto global standards for AI development and deployment. While politicians posture, these entities act, shaping the AI landscape in ways few truly comprehend.

The New Sheriffs in Town: Identifying AI’s Private Regulators

When we think of regulation, we picture laws and government agencies. But in the digital age, particularly with a technology as pervasive and fast-moving as AI, the real chokepoints of control often lie with a handful of global corporations:

Payment Processors (e.g., Visa, Mastercard, Stripe, PayPal): The lifeblood of any online service is its ability to transact. These financial giants, through their Acceptable Use Policies (AUPs), dictate which kinds of businesses and content they will process payments for, effectively holding veto power over entire business models.

App Stores (e.g., Apple App Store, Google Play Store): For AI to reach billions via mobile devices, it must pass through the stringent review processes of this duopoly. Its decisions on what constitutes a “harmful” or “deceptive” AI app can make or break a company overnight.

Cloud Providers (e.g., AWS, Azure, Google Cloud): The immense computational power required to train and run large AI models is predominantly hosted by a few major cloud providers. Their terms of service are a fundamental dependency for nearly every major AI lab and service.

Social Media Platforms & Large Tech Companies (e.g., Meta, X, Google Search): These platforms, using their own AI for moderation, determine which AI-generated content is permissible, how visible it is, and how it can be disseminated to vast audiences.

The “How”: A Case Study in Deplatforming

These entities wield influence not through legislation, but through their terms of service and AUPs. The ultimate enforcement mechanism is often stark: financial deplatforming. This isn’t theoretical; it’s a reality that generative AI platforms have already faced.

The Civitai precedent is a clear case study. In May 2025, the popular AI art platform announced that its primary payment processor was terminating service, citing the platform’s allowance of user-generated mature content, which, though legal and moderated, fell outside the processor’s “comfort zone.” This was a direct, unambiguous act of “chokepoint control.” The financial gatekeeper, not a government, dictated what kind of art was acceptable by threatening the platform’s very ability to transact.

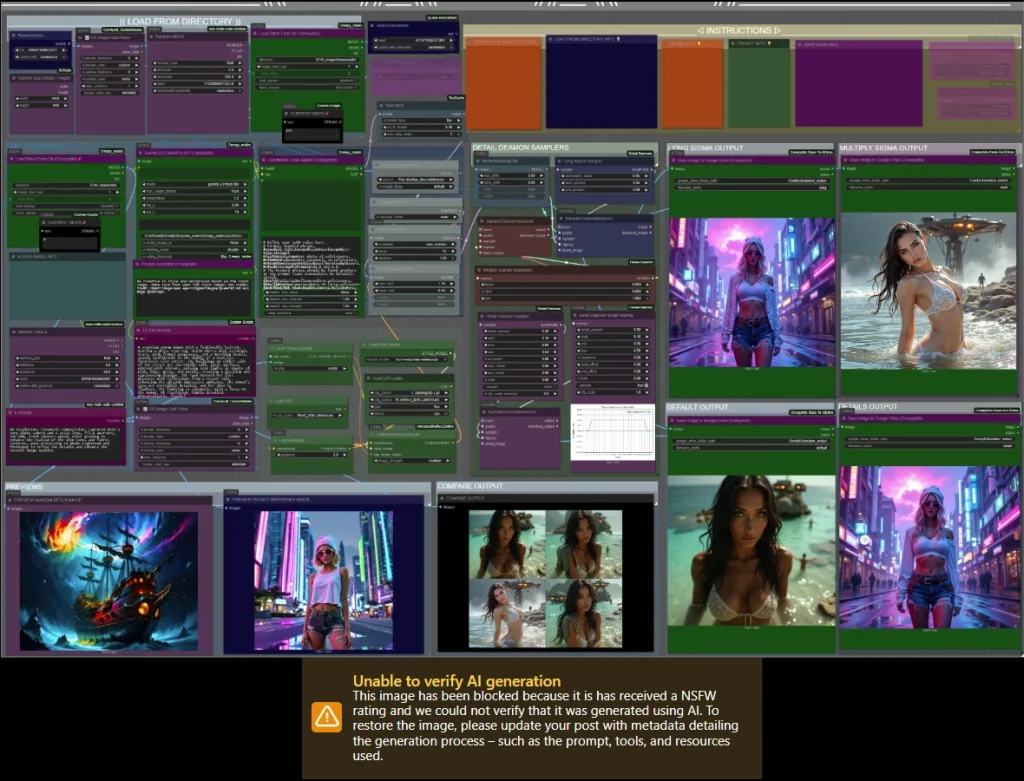

The immediate consequence was not a clean, surgical removal of prohibited content, but the deployment of a clumsy, often absurd, automated compliance machine. This system, designed to appease the financial gatekeepers, operates with a breathtaking lack of context. It has flagged screenshots of the creation workflow itself as “NSFW,” and ironically blocks stylistically obvious AI art with the justification that it “cannot verify it was generated using AI.”

For those of you who are unfamiliar with ComfyUI, the image above shows a workflow: the exact set of instructions needed to create a specific image (in this case, the images shown in the workflow itself). Even so, the system is unable to determine whether the result is AI-generated.

The process for appeal reveals the true nature of this new governance. The burden of proof is shifted entirely to the creator. I personally had an image of a fantasy “goat woman” censored, and it was only restored after I sarcastically inquired how many real-life goat women the support team was aware of. While manual overrides are possible, the official solution is to provide detailed metadata for every image. This policy creates a “censorship by attrition.” For simple creations, it’s a hurdle; for complex, multi-stage artworks, it’s an unreasonable demand, effectively silencing the platform’s most innovative artists by making the cost of compliance impossibly high.

This system isn’t designed for fairness; it’s designed for plausible deniability, protecting the platform from its financial partners at the direct expense of its creative community. The inevitable counter-maneuver, as predicted, was for Civitai to implement cryptocurrency as an alternative payment method—a deliberate move to bypass the control of traditional financial institutions. It’s a technological arms race between platforms striving for creative freedom and the centralized institutions acting as the arbiters of online culture.

Conclusion: Beyond Government Pretensions – Understanding AI’s True Governance

As we conclude this exploration, it becomes clear that the narrative of governments single-handedly “regulating AI” is an oversimplification. While politicians debate and draft laws, a powerful undercurrent of private regulation is already shaping the AI ecosystem on a global scale.

These private gatekeepers, driven by their own imperatives of risk management and brand protection, often act with a speed and directness that governments can only envy. Their rules, though unelected and often opaque, can have a more immediate and far-reaching impact on what AI is developed, deployed, and ultimately, what AI we experience.

The critical task for society, then, is not merely to ask whether or how governments will regulate AI. It is also to understand, scrutinize, and debate the immense influence of these private arbiters. To the extent that AI can be “regulated” in its current, rapidly evolving, and often open-source form, much of that effective control already lies in these non-governmental hands. The real challenge is to ensure that this de facto governance, wherever it originates, genuinely mitigates harm without unjustly stifling the immense potential of this transformative technology, and to demand a level of transparency and accountability that is currently sorely lacking.

The future of AI isn’t just being coded; it’s being shaped by terms of service agreements you’ve likely never read.

Don’t forget to sign up for my newsletter to stay up to date!